Embeddings

Embeddings are vectors that represent entire documents, or fragments of them, in a continuous vector space. This numerical representation is required to efficiently infer similarities between documents and to implement features like Semantic Search.
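To make the idea of similarity between vectors concrete, here is a minimal sketch in Python. It uses cosine similarity, a measure commonly applied to embeddings; this page does not say which measure LogicalDOC uses internally, so treat that choice as an assumption. The three-dimensional vectors and their names are invented for illustration, and real embeddings typically have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # A zero vector has no direction; treat it as unrelated to everything.
        return 0.0
    return dot / (norm_a * norm_b)


# Made-up toy embeddings (assumption: not produced by any real model).
invoice_a = [0.9, 0.1, 0.0]
invoice_b = [0.8, 0.2, 0.1]
recipe = [0.0, 0.2, 0.9]

# The two invoices point in nearly the same direction, the recipe does not.
print(cosine_similarity(invoice_a, invoice_b) > cosine_similarity(invoice_a, recipe))
```

A score close to 1 means the two vectors point in almost the same direction, which is how documents with related content end up near each other in a semantic search.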

This means that LogicalDOC must calculate the embeddings for the documents in your repository and save them into the Vector Store, which must be set up beforehand.

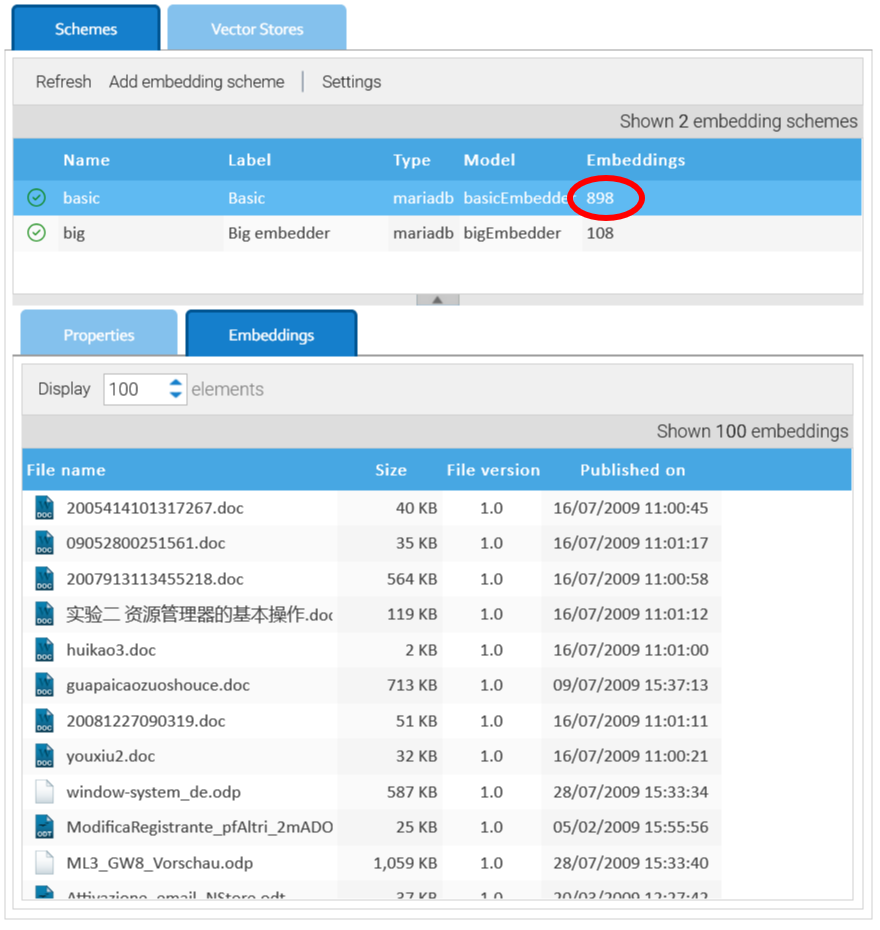

Embedding Schemes

There is no single way to calculate the embedding of a document: the result depends on the embedding model you use.

In Administration > Artificial Intelligence > Embeddings, you can manage different embedding schemes, each of which tells LogicalDOC how to process the documents with a specific embedding model.

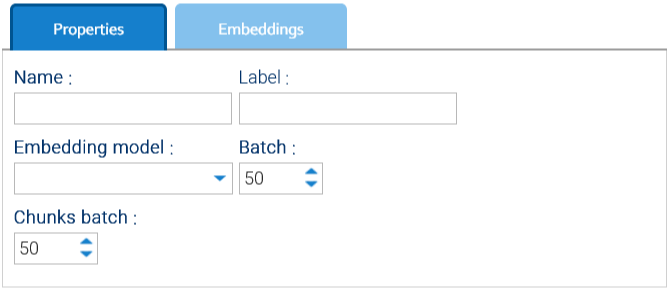

When you create a new scheme by clicking on Add embedding scheme, you will be required to specify one of the available embedding models.

At the time of writing, you can choose between the Embedder models built into LogicalDOC itself and the embedding models available in ChatGPT.

Other common settings are:

- Batch: Number of documents processed at the same time

- Chunks batch: Number of chunks added to the vector store at the same time
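The following Python sketch illustrates one way the two settings above could interact. It is not LogicalDOC's implementation: the nesting of the two batches, the fixed-size chunker `split_into_chunks`, the placeholder model `fake_embed`, and the list used as a vector store are all hypothetical and exist only to show the idea.

```python
from itertools import islice


def batched(items, size):
    """Yield successive lists of at most `size` items."""
    it = iter(items)
    while True:
        group = list(islice(it, size))
        if not group:
            return
        yield group


def split_into_chunks(text, chunk_size=20):
    """Hypothetical chunker: cut the text into fixed-size character windows."""
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]


def fake_embed(chunk):
    """Stand-in for a real embedding model: returns a tiny made-up vector."""
    return [float(len(chunk)), float(sum(map(ord, chunk)) % 97)]


def run_embedder(documents, batch, chunks_batch, store):
    """Take `batch` documents per round and write `chunks_batch` vectors per store call."""
    writes = 0
    for doc_group in batched(documents, batch):
        chunks = [c for doc in doc_group for c in split_into_chunks(doc)]
        for chunk_group in batched(chunks, chunks_batch):
            store.extend((c, fake_embed(c)) for c in chunk_group)
            writes += 1  # one simulated write to the vector store
    return writes


vector_store = []
docs = ["a" * 50, "b" * 30, "c" * 10]  # 3 + 2 + 1 = 6 chunks in total
writes = run_embedder(docs, batch=2, chunks_batch=2, store=vector_store)
print(len(vector_store), writes)  # prints: 6 4
```

In this sketch, a larger Batch means fewer rounds over the repository, and a larger Chunks batch means fewer but bigger writes to the vector store, at the cost of more memory held per step.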

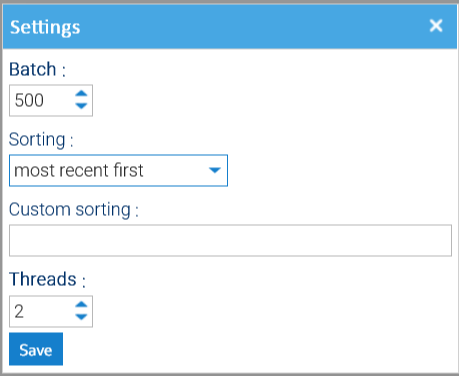

Background Processing

Like full-text indexing, the calculation of embeddings is very CPU-intensive, so it is carried out by the scheduled task Embedder.

Click on the Settings button to see some configuration parameters that regulate how the task works.

As vectors are calculated and saved into the vector store, you can follow the progress in the counter and in the Embeddings panel.